Executing a rule

To execute a rule and its associated tasks, provide all inputs (ApplicationType details, CredentialTypes, and any user inputs) in a file named inputs.yaml in your rule folder.

For Python tasks, list any third-party library dependencies in the requirements.txt file in each task folder. For the GitHub use case, for example, include these two Python libraries:

aiohttp==3.8.3

PyGithub

Unit testing instructions (if you wish to test each task individually)

When you unit test a specific task, the inputs.yaml file in your rule folder is ignored, so set all inputs in the inputs.yaml in your task folder instead. Conversely, when you execute an entire rule, the inputs.yaml files in your task folders are ignored.
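The precedence described above can be summarized in a few lines. This is only an illustrative sketch: effective_inputs_file is a hypothetical helper, not part of the tooling, and the folder paths are whatever you pass in.

```python
from pathlib import Path
from typing import Optional


def effective_inputs_file(rule_dir: Path, task_dir: Optional[Path] = None) -> Path:
    """Return the inputs.yaml that is read for a given kind of run.

    Hypothetical helper for illustration only:
    - testing a single task  -> the task folder's inputs.yaml
    - executing a whole rule -> the rule folder's inputs.yaml
    """
    if task_dir is not None:
        # Unit testing one task: the rule-level file is ignored.
        return task_dir / "inputs.yaml"
    # Executing the entire rule: task-level files are ignored.
    return rule_dir / "inputs.yaml"
```

Passing only the rule folder models a full rule execution; passing a task folder as well models an individual task test.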

To perform individual task testing, execute either the autogenerated_main.go (for Go) or autogenerated_main.py (for Python) file located in your task folder.

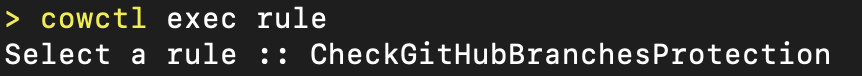

Use the exec rule command to execute the rule, which will prompt you to select the rule.

You will see a list of available rules from both the local catalog and the global catalog. Once you choose a rule, its execution begins and you can follow the progress.

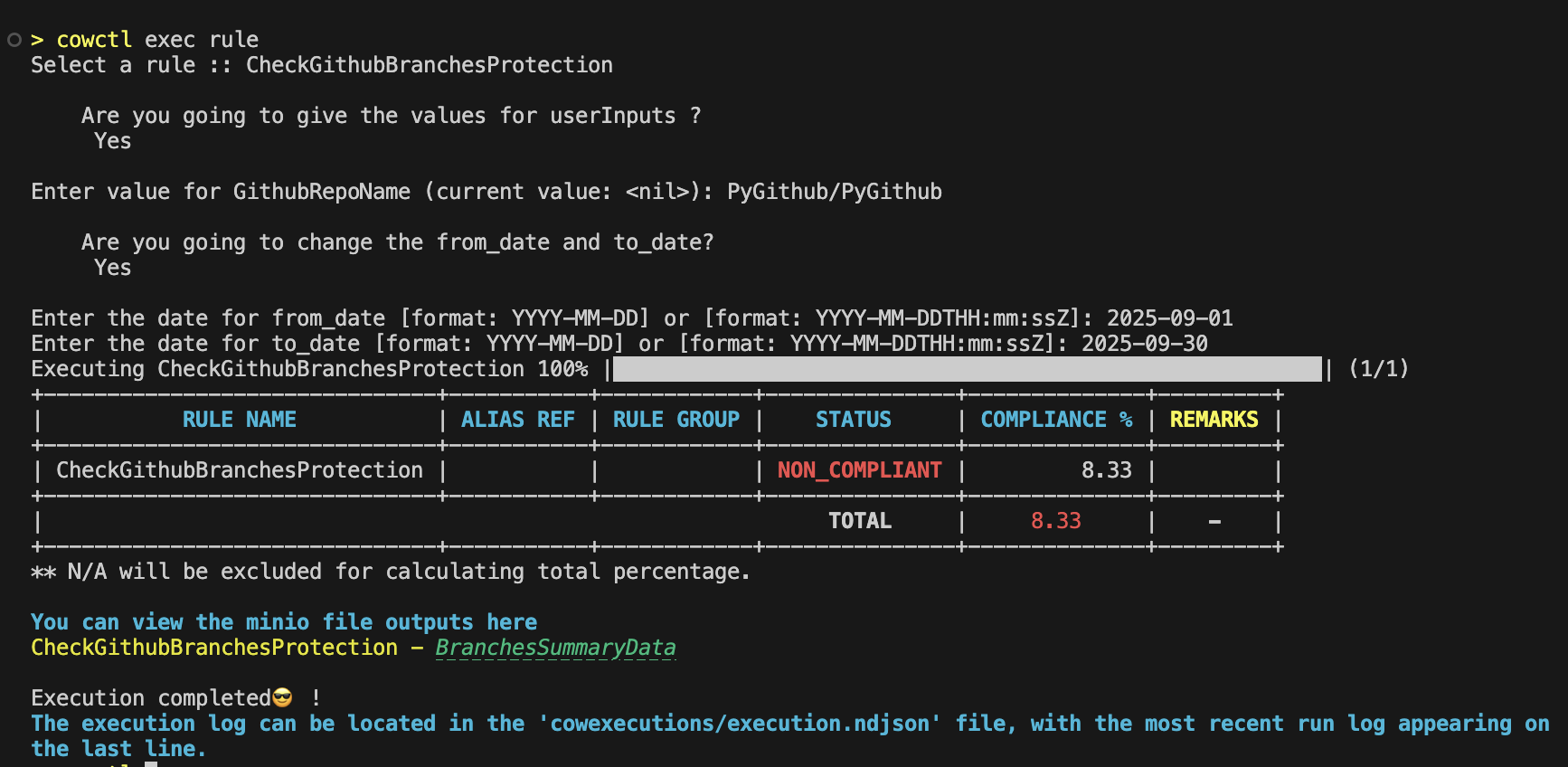

Executing a rule produces output based on the configuration in the rule.yaml file in the rule folder. This output typically includes the following details:

- Compliance Status: The outcome of the rule execution against the specified criteria. It is one of COMPLIANT, NON_COMPLIANT, or NOT_DETERMINED.

- Compliance Percentage: Included in the output only if the task logic calculates it. The percentage quantifies the extent to which compliance has been achieved.

During rule execution in OpenSecurityCompliance, you are prompted to provide input values interactively, such as:

- String or Simple Inputs: Direct values, such as repository names (GithubRepoName), identifiers, or other textual information. You will be prompted to enter these during execution.

- File Inputs: Rules can also accept file inputs. To test this locally, upload the file to Minio (http://localhost:9001) and when prompted, provide the file path as the value.

- Control Period: The from_date and to_date define the control period for the rule execution. You will be prompted to provide these dates to ensure the rule runs over the correct timeframe.

Troubleshooting

If any errors occur, review the execution logs in Minio at http://localhost:9001/. Log in with the username and password that you set in the etc/policycow.env configuration. The path to the log file in Minio is given in the last line of the cowexecutions/execution.ndjson file.

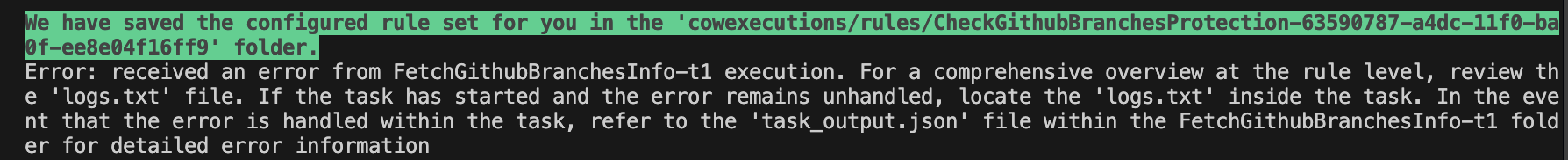
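Since the log path is recorded in the last line of cowexecutions/execution.ndjson, a small script can pull that record out for you. The sketch below assumes only that each line of the file is a standalone JSON object (the NDJSON convention). The field names inside the record are not documented here, so the function returns the whole record rather than guessing which key holds the path. last_ndjson_record is a hypothetical helper name.

```python
import json
from pathlib import Path


def last_ndjson_record(path):
    """Parse the last non-empty line of an NDJSON file and return it.

    Assumption: every line is one complete JSON value, as NDJSON requires.
    """
    text = Path(path).read_text(encoding="utf-8")
    lines = [line for line in text.splitlines() if line.strip()]
    if not lines:
        raise ValueError(f"{path} contains no records")
    return json.loads(lines[-1])
```

For example, calling last_ndjson_record("cowexecutions/execution.ndjson") from the repository root and printing the result should show the most recent execution entry, including the log location to look up in Minio.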

If an unexpected error occurs during rule execution, you’ll see a message like the one shown below:

This indicates that the rule encountered an issue during execution. To investigate further:

- Open the corresponding rule execution folder mentioned in the message.

- Review the logs.txt file for detailed task-level logs.

- If the error is handled within the task, check the task_output.json file for additional context and error details.
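The checks above can be scripted when you triage many executions. The following is a minimal sketch, assuming the execution folder contains one or more logs.txt and task_output.json files somewhere beneath it. The exact folder layout is not specified here, so the sketch searches recursively. summarize_execution is a hypothetical helper, not part of the tooling.

```python
import json
from pathlib import Path


def summarize_execution(folder, tail=20):
    """Collect the last `tail` log lines and every task output under `folder`.

    Assumption: files are named logs.txt and task_output.json; their depth
    inside the execution folder is unknown, hence the recursive search.
    """
    root = Path(folder)
    summary = {"log_tail": {}, "task_outputs": {}}

    for log in sorted(root.rglob("logs.txt")):
        lines = log.read_text(encoding="utf-8", errors="replace").splitlines()
        summary["log_tail"][str(log.relative_to(root))] = lines[-tail:]

    for out in sorted(root.rglob("task_output.json")):
        key = str(out.relative_to(root))
        try:
            summary["task_outputs"][key] = json.loads(out.read_text(encoding="utf-8"))
        except json.JSONDecodeError as exc:
            # Keep going: a malformed output file is itself a useful clue.
            summary["task_outputs"][key] = {"parse_error": str(exc)}

    return summary
```

Pass the rule execution folder named in the error message, then inspect summary["log_tail"] for task-level logs and summary["task_outputs"] for handled errors.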

Debugging a task locally

Python

Follow these steps:

- Install local packages using the install_cow_packages.sh script in the main folder.

- In the debug configuration of python task in {{repo}}/.vscode/launch.json file ("name": "Python: task"):

  - Pass the env file path in "envFile" attribute.

  - Pass any other env values that are not present in the env file as key value pairs under "env" attribute.

- If your task requires you to upload to or download files from minio, update the env MINIO_LOGIN_URL in {{repo}}/etc/policycow.env as shown here: MINIO_LOGIN_URL=localhost:9000

- Select the autogenerated_main.py file in the task folder and start debugging.

Note: Undo the change to MINIO_LOGIN_URL once local testing is done. Otherwise, the exec rule command will fail.
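Because forgetting to revert MINIO_LOGIN_URL breaks exec rule, it can help to make the change reversible. The sketch below is one possible approach, not part of the tooling: set_env_value and restore_env are hypothetical helpers that assume the env file uses plain KEY=value lines, and they keep the original file as a .bak copy next to it.

```python
import shutil
from pathlib import Path


def _backup_path(path: Path) -> Path:
    return path.with_name(path.name + ".bak")


def set_env_value(env_file, key, value):
    """Set KEY=value in a KEY=value style env file, backing it up first."""
    path = Path(env_file)
    backup = _backup_path(path)
    if not backup.exists():
        # Preserve the first original only, even if called repeatedly.
        shutil.copy2(path, backup)

    lines = path.read_text(encoding="utf-8").splitlines()
    replaced = False
    for i, line in enumerate(lines):
        if line.split("=", 1)[0].strip() == key:
            lines[i] = f"{key}={value}"
            replaced = True
    if not replaced:
        lines.append(f"{key}={value}")
    path.write_text("\n".join(lines) + "\n", encoding="utf-8")
    return backup


def restore_env(env_file):
    """Put the backed-up original back in place and remove the backup."""
    path = Path(env_file)
    _backup_path(path).replace(path)
```

Before debugging, you would call set_env_value("etc/policycow.env", "MINIO_LOGIN_URL", "localhost:9000"), and after local testing, restore_env("etc/policycow.env") to return the file to its previous state.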